In the digital era, data centers are not just buildings filled with servers – they are the living nervous systems of the cloud. Every transaction, online game, AI inference, and streamed video passes through the intricate network fibers and switching fabrics that make up these vast infrastructures. Yet as the complexity of data centers has scaled exponentially, so too has the challenge of managing their invisible circulatory system: the network.

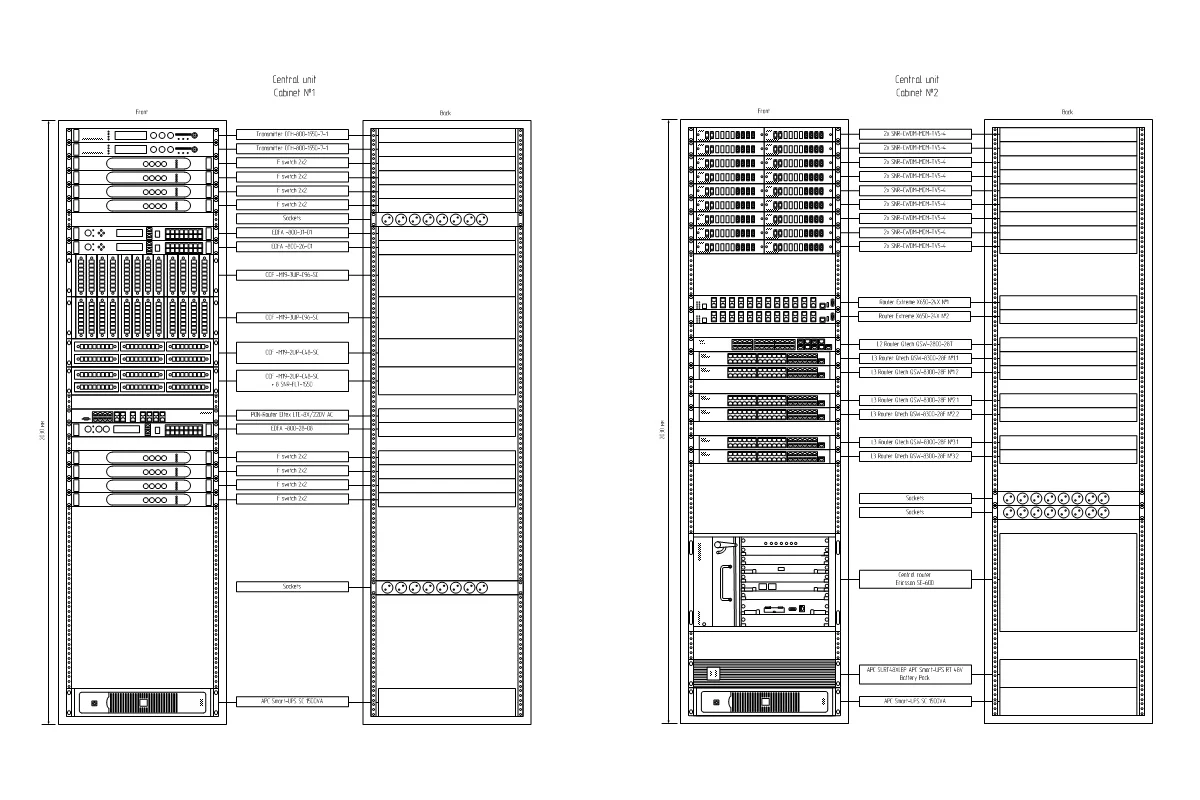

To operate reliably at scale, a data center must integrate four disciplines seamlessly: physical network management, Network Operations Center (NOC) management, fiber analytics, and Data Center Infrastructure Management (DCIM). Each plays a distinct but interdependent role. When unified, they form an intelligent, self-observing system – one capable of maintaining near-zero downtime while optimizing performance and energy use.

From physical fiber to logical flow

Although networks are often described in abstract terms – routing tables, VLANs, IP subnets – their reality is deeply physical. Every data flow depends on the microscopic precision of fiber alignment and the macroscopic design of conduits, patch panels, and switch fabrics.

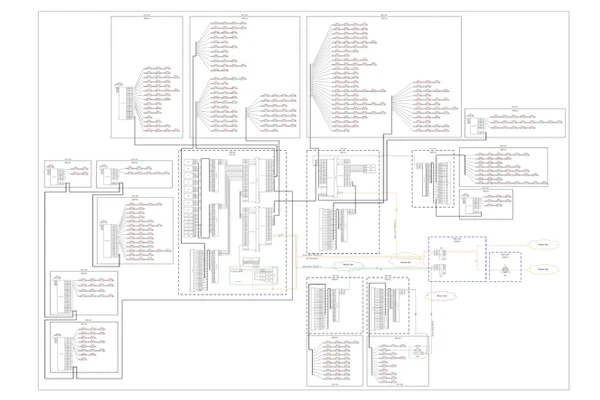

Modern data center network management therefore operates across multiple layers. Logical telemetry (such as SNMP, sFlow, and routing data) must be correlated with physical telemetry from optical sensors, OTDR traces, and temperature monitors. Only through this union can operators distinguish between a logical fault (like a BGP flap) and a physical fault (such as a micro-bend in a fiber strand).
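Here is a minimal Python sketch of one such correlation rule. It assumes both telemetry streams have already been normalized to a shared link identifier. The `Event` type, the `link_id` key, and the 60-second window are illustrative assumptions, not part of any particular NMS. Under this rule, a logical event such as a BGP session drop is attributed to a physical cause when an optical anomaly on the same link appears close in time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    timestamp: datetime
    link_id: str  # hypothetical key shared by the logical and physical inventories
    kind: str     # free-form label, e.g. "bgp_session_down" or "rx_power_drop"


def classify_fault(logical_event, physical_events, window=timedelta(seconds=60)):
    """Return "physical" if any optical anomaly on the same link lies within
    +/- window of the logical event, otherwise "logical"."""
    for ev in physical_events:
        same_link = ev.link_id == logical_event.link_id
        close_in_time = abs(ev.timestamp - logical_event.timestamp) <= window
        if same_link and close_in_time:
            return "physical"
    return "logical"


t0 = datetime(2024, 1, 1, 12, 0, 0)
bgp_flap = Event(t0, "spine1-leaf7", "bgp_session_down")
optical = [Event(t0 - timedelta(seconds=5), "spine1-leaf7", "rx_power_drop")]
print(classify_fault(bgp_flap, optical))  # physical
print(classify_fault(bgp_flap, []))       # logical
```

A production correlator would weigh more evidence, such as interface error counters, OTDR event positions, and change records. Still, a join on shared topology and time is a reasonable starting point.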

This fusion of data layers turns network management into a kind of systems biology: a science of interdependent feedback loops. Metrics like availability, latency distributions, mean time to detect (MTTD), and mean time to restore (MTTR) are not mere statistics; they are physiological indicators of the data center’s overall health.
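Two of these indicators can be summarized in a few lines. The Python sketch below computes availability as the fraction of an observation period during which the service was up. It reads latency percentiles with the standard library's `statistics.quantiles`. The function names and the choice of p50/p95/p99 are illustrative, not a prescribed method.

```python
import statistics


def availability(period_s, downtime_s):
    """Fraction of the observation period during which the service was up."""
    return (period_s - downtime_s) / period_s


def latency_percentiles(samples_ms):
    """p50/p95/p99 cut points (interpolated); needs at least two samples."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


month_s = 30 * 24 * 3600                       # a 30-day month in seconds
print(f"{availability(month_s, 259.2):.4%}")   # 99.9900%
print(latency_percentiles(list(range(1, 101))))
```

Here 259.2 seconds of downtime in a 30-day month corresponds to 99.99% availability. Percentiles are reported instead of a single average because a mean can hide the tail of the distribution.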

The NOC as a control center for digital homeostasis

At the operational core of every large data center lies the Network Operations Center (NOC) – a real-time command environment that blends human expertise with algorithmic precision.

A modern NOC functions much like an air traffic control tower. It continuously monitors thousands of sensors and alerts, performs triage, and initiates corrective actions before customers ever notice an impact. The effectiveness of a NOC is often measured by its Mean Time to Detect (MTTD) and Mean Time to Restore (MTTR) – the twin indicators of operational agility.
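Both indicators are simple averages over incident timestamps. This Python sketch assumes each incident record carries a fault start, detection, and restoration time; the `Incident` type is hypothetical. It measures MTTR from detection to restoration. Some organizations measure it from fault onset instead, so the convention should be stated wherever the number is reported.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime   # when the fault began (often reconstructed afterwards)
    detected: datetime  # when monitoring or an operator first flagged it
    restored: datetime  # when service was restored


def mean_delta(deltas):
    """Average of a sequence of timedeltas."""
    deltas = list(deltas)
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents):
    """Mean time to detect: average of detected - started."""
    return mean_delta(i.detected - i.started for i in incidents)


def mttr(incidents):
    """Mean time to restore, measured here from detection to restoration."""
    return mean_delta(i.restored - i.detected for i in incidents)


t = datetime(2024, 3, 1, 9, 0)
incidents = [
    Incident(t, t + timedelta(minutes=2), t + timedelta(minutes=12)),
    Incident(t, t + timedelta(minutes=4), t + timedelta(minutes=24)),
]
print(mttd(incidents))  # 0:03:00
print(mttr(incidents))  # 0:15:00
```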

Artificial intelligence and automation have already transformed NOC workflows. Routine incidents are now triaged automatically, runbooks are converted into machine-readable playbooks, and pattern recognition systems can correlate fiber faults, router logs, and environmental data into a single unified alert. The best NOCs practice not just reaction, but anticipation – training on simulated outage scenarios so that real-world incidents unfold like rehearsed choreography rather than crisis improvisation.
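The following is a minimal sketch of this kind of correlation. It assumes every fiber-monitor, router-log, and environmental signal has already been tagged with a shared location key. The `location` field, the rack IDs, the sample messages, and the two-minute window are all hypothetical. Signals at the same location that arrive close together collapse into one alert.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Signal:
    timestamp: datetime
    location: str  # hypothetical shared key, e.g. a rack ID taken from DCIM
    source: str    # e.g. "fiber_monitor", "router_log", "environment"
    message: str


@dataclass
class Alert:
    location: str
    first_seen: datetime
    last_seen: datetime
    signals: list = field(default_factory=list)


def correlate(signals, window=timedelta(minutes=2)):
    """Merge signals at the same location into one alert while each new signal
    arrives within `window` of the previous one; a longer gap opens a new alert."""
    latest = {}   # location -> most recent alert for that location
    alerts = []
    for sig in sorted(signals, key=lambda x: x.timestamp):
        alert = latest.get(sig.location)
        if alert is None or sig.timestamp - alert.last_seen > window:
            alert = Alert(sig.location, sig.timestamp, sig.timestamp)
            latest[sig.location] = alert
            alerts.append(alert)
        alert.signals.append(sig)
        alert.last_seen = sig.timestamp
    return alerts


t0 = datetime(2024, 5, 6, 14, 0, 0)
s = timedelta(seconds=1)
signals = [
    Signal(t0, "A12", "fiber_monitor", "loss increase on strand 17"),
    Signal(t0 + 20 * s, "A12", "router_log", "interface Et1/17 down"),
    Signal(t0 + 30 * s, "B03", "router_log", "BGP neighbor reset"),
    Signal(t0 + 50 * s, "A12", "environment", "rack inlet temperature high"),
    Signal(t0 + 600 * s, "A12", "fiber_monitor", "loss increase on strand 17"),
]
for a in correlate(signals):
    print(a.location, len(a.signals), sorted({x.source for x in a.signals}))
```

In this example, the fiber loss, the interface drop, and the temperature reading at rack A12 become one alert. The later repeat at A12 opens a new one.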

DCIM as the data center’s cognitive layer

Data Center Infrastructure Management (DCIM) systems are the cognitive frameworks that integrate power, cooling, network, and facility management. At their most advanced, DCIM platforms act as digital twins of the physical data center, mapping every device, circuit, and fiber strand as a living, data-driven model.

Integrating fiber analytics into DCIM tools marks a decisive evolution. Fiber analytics involve continuous measurement of the optical network – using technologies such as Optical Time-Domain Reflectometry (OTDR) and Distributed Temperature Sensing (DTS) – to detect degradation, splice losses, or physical stress along fiber paths.
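One common starting point is a loss budget. The expected end-to-end loss of a link is its length times the fiber attenuation coefficient, plus the loss of each splice and connector. The Python sketch below compares a measured loss against that budget. The measurement might be the total from an OTDR trace or from a light-source/power-meter test. The per-element defaults are illustrative placeholders only, not normative limits; substitute the values from the applicable cabling standard or vendor datasheets.

```python
def expected_loss_db(length_km, splices, connectors,
                     atten_db_per_km=0.4, splice_db=0.1, connector_db=0.5):
    """Loss budget: fiber attenuation + splice losses + connector losses.
    Default per-element values are illustrative placeholders, not normative limits."""
    return length_km * atten_db_per_km + splices * splice_db + connectors * connector_db


def is_degraded(measured_db, budget_db, margin_db=0.5):
    """Flag a link whose measured loss exceeds its budget by more than margin_db."""
    return measured_db - budget_db > margin_db


budget = expected_loss_db(0.5, splices=2, connectors=4)
print(round(budget, 2))          # 2.4
print(is_degraded(3.2, budget))  # True
print(is_degraded(2.6, budget))  # False
```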

When this data is ingested by DCIM software, each fiber record evolves from a static asset entry into a dynamic health object. The system can visualize where optical losses occur, correlate them with nearby power or cooling anomalies, and even predict failures before they cause service interruptions. A spike in attenuation along a fiber trunk can automatically generate a NOC alert, launch a ticket, and simulate service impact based on the digital twin’s topology.
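The attenuation-spike trigger can be sketched as a simple statistical test. This Python example is a minimal illustration. It assumes a short history of attenuation readings per trunk and uses a plain dictionary as a stand-in for the digital twin's topology. `detect_spike`, `build_alert`, the thresholds, and the trunk and circuit IDs are all hypothetical. A reading is flagged when it is both materially higher than the recent mean and far outside its normal spread. The resulting alert lists the circuits that ride on the trunk.

```python
import statistics


def detect_spike(history_db, current_db, z_threshold=3.0, min_delta_db=0.5):
    """True if current_db sits well above recent history: at least min_delta_db
    above the mean and more than z_threshold population standard deviations above it."""
    mean = statistics.fmean(history_db)
    stdev = statistics.pstdev(history_db)
    delta = current_db - mean
    if delta < min_delta_db:
        return False
    # A perfectly flat history has zero spread; any jump past min_delta_db counts.
    return stdev == 0 or delta / stdev > z_threshold


def build_alert(trunk_id, current_db, topology):
    """topology stands in for the digital twin: trunk ID -> circuits riding on it."""
    return {
        "trunk": trunk_id,
        "attenuation_db": current_db,
        "impacted_circuits": sorted(topology.get(trunk_id, [])),
    }


history = [2.0, 2.1, 2.0, 1.9, 2.0]  # recent readings for one trunk, in dB
topology = {"TRUNK-H2-07": ["circuit-114", "circuit-087"]}
if detect_spike(history, 3.5):
    print(build_alert("TRUNK-H2-07", 3.5, topology))
```

Requiring both an absolute delta and a z-score avoids paging the NOC for tiny fluctuations on an unusually quiet link.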

Fiber network software as a hidden backbone of connectivity

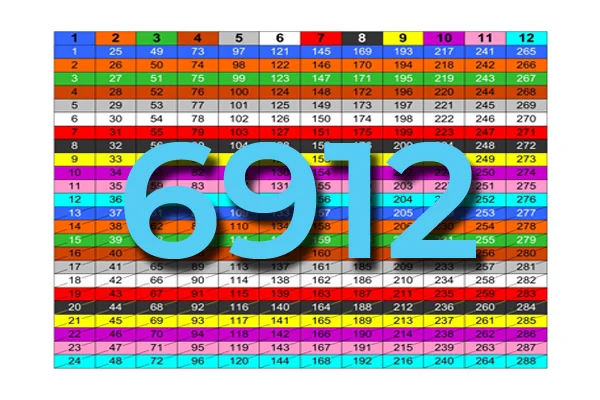

Beneath the orchestration and analytics lies the toolchain that makes fiber management possible. Dedicated fiber network software provides strand-level modeling, connection tracking, and automated patch documentation – a necessity in hyperscale environments where a single hall can contain over 100,000 individual fiber connections.
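Strand-level connection tracking can be modeled as following a strand through alternating hops. First, a patch cord or trunk strand leads to the next port. Then a pass-through leads to the opposite face of the same panel position. The Python sketch below assumes that model, with hypothetical port names such as `PP-A:F1` (panel A, front position 1). It also assumes the trace starts at an equipment port, so the first hop is a cord. A fuller model would also need cassettes, polarity, and splice trays.

```python
def connect(table, a, b):
    """Record a two-way join between ports a and b."""
    table[a] = b
    table[b] = a


def trace_path(start, cords, panels):
    """Follow one strand from `start` to its far end.

    cords:  port -> port joined by a patch cord or trunk strand
    panels: port -> port on the opposite face of the same panel position
    Hops alternate between the two tables; returns the ordered list of ports.
    """
    path = [start]
    port = start
    use_cords = True
    while True:
        nxt = (cords if use_cords else panels).get(port)
        if nxt is None or nxt in path:  # end of the strand, or a loop in bad records
            return path
        path.append(nxt)
        port = nxt
        use_cords = not use_cords


cords, panels = {}, {}
connect(cords, "leaf1:eth1", "PP-A:F1")    # patch cord, switch to panel A front
connect(panels, "PP-A:F1", "PP-A:R1")      # panel A front <-> rear
connect(cords, "PP-A:R1", "PP-B:R1")       # trunk strand between panels
connect(panels, "PP-B:R1", "PP-B:F1")      # panel B rear <-> front
connect(cords, "PP-B:F1", "spine3:eth12")  # patch cord, panel B front to switch
print(" -> ".join(trace_path("leaf1:eth1", cords, panels)))
```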

The most capable platforms support:

- Strand-level mapping, to visualize every optical path.

- Automated ingestion of OTDR and monitoring data for real-time condition tracking.

- Impact analysis, simulating the service consequences of physical changes before they occur.

- Open APIs, allowing integration with NMS, DCIM, and ITSM systems.
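Impact analysis can be approximated as a set problem. Given the strands a planned change will disconnect, check each service's diverse paths. This Python sketch is illustrative only. The service and strand IDs are hypothetical, and each path is modeled simply as a set of strand IDs.

```python
def impact_of_change(cut_strands, services):
    """Classify each service given the strands a planned change would cut.

    services: service name -> list of diverse paths, each an iterable of strand IDs.
    "down" if every path loses a strand, "degraded" if only some do.
    """
    cut = set(cut_strands)
    result = {}
    for name, paths in services.items():
        broken = sum(1 for p in paths if cut.intersection(p))
        if paths and broken == len(paths):
            result[name] = "down"
        elif broken:
            result[name] = "degraded"
        else:
            result[name] = "unaffected"
    return result


services = {
    "tenant-A": [{"T1-s01", "PP3-s01"}, {"T2-s05", "PP4-s05"}],  # two diverse paths
    "tenant-B": [{"T1-s02", "PP3-s02"}],                          # single path
    "tenant-C": [{"T9-s01"}],
}
print(impact_of_change({"T1-s01", "T1-s02"}, services))
# {'tenant-A': 'degraded', 'tenant-B': 'down', 'tenant-C': 'unaffected'}
```

Because tenant-A keeps one intact path, the change reduces its redundancy without taking it down. That is the distinction an operator needs before approving a maintenance window.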

In practical terms, this means that a fiber break can be detected by an inline monitor, reported into the DCIM system, visualized on a floor plan, and correlated with switch logs – all within seconds, often without human intervention. Such closed-loop integration represents the future of intelligent infrastructure management.

Data center fiber networks broadly inherit the topology and technology of other fiber networks, such as WAN, metro, and distribution networks, and floor-level networks often resemble a PON topology. Widely used fiber-mapping tools such as Splice.me can therefore be applied here too.

Standards and regulatory foundations

- ANSI/TIA-942 defines telecommunications infrastructure and cabling design for data centers.

- EN 50600 is the European reference for data center construction, availability, and sustainability.

- Uptime Institute Tier Standards establish reliability classifications from Tier I to Tier IV.

- ISO/IEC 27001 specifies requirements for information security management systems, crucial for multi-tenant environments.

- NIST SP 800-53 provides a comprehensive catalog of security and privacy controls.

- IEC 62443 guides cybersecurity for industrial and operational technology, increasingly relevant as DCIM and environmental control systems converge.

- GDPR and related privacy regulations establish data protection obligations for operators managing personal or customer data within their facilities.

The path toward predictive, autonomous infrastructure

As the boundary between physical and virtual infrastructure continues to blur, the next generation of data centers will operate more like living organisms: sensing, reasoning, and responding autonomously. Fiber analytics will not merely detect faults; they will predict them. DCIM systems will not just visualize assets; they will simulate changes before execution. And NOCs will evolve from monitoring centers into decision orchestration hubs, where human operators supervise AI-driven event correlation and remediation.

The ultimate vision is an adaptive, self-healing data center – one where the network continuously learns, optimizes, and protects itself. Achieving that vision demands not just better tools, but a holistic philosophy: treating the data center as an integrated cyber-physical ecosystem governed by scientific measurement, automation, and human insight.

In summary

Data center network management, NOC operations, DCIM integration, and fiber analytics are no longer separate disciplines. Together, they constitute the nervous system of the digital world – a system that must be instrumented, understood, and intelligently managed if we are to sustain the exponential growth of our connected civilization.
