The world’s information flows through glass. From data centers to the last mile of urban and rural broadband, optical fiber forms the nervous system of the digital economy. Yet fiber is not infinite. Its performance is bounded by physics, its availability by planning, and its longevity by management. Beneath the illusion of boundless bandwidth lies a careful choreography of fiber bandwidth planning, fiber capacity prediction, and fiber asset management – a dance that blends physics, software, and foresight.

Bandwidth planning in fiber networks

Bandwidth planning is the art of translating uncertain demand growth into tangible physical infrastructure. It requires both engineering precision and economic intuition. The process begins with understanding not just how much data must move, but how it moves – in bursts, diurnal patterns, long-lived flows, and geographically uneven distributions.

At its heart lies the coupling of logical and physical constraints. Logical traffic forecasts describe what services the network must carry – for instance, 10 Tb/s of east–west traffic in a data-center cluster by 2028. The physical layer, however, tells you what’s possible within a strand of fiber. Fiber attenuation, chromatic dispersion, and nonlinear optical effects all limit capacity. In dense wavelength-division multiplexing (DWDM) systems, each fiber might carry 80 or more wavelengths, but amplifier gain profiles and noise determine how far those channels can go before regeneration.

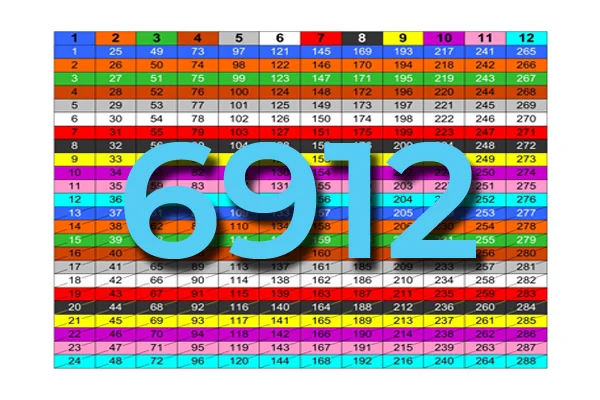

A simple numerical illustration shows the tension between growth and capacity: suppose a metro operator expects demand to grow from 500 Gb/s to 4 Tb/s in five years – roughly 52% compound annual growth. If each DWDM channel supports 100 Gb/s, the operator must light 40 channels to satisfy peak demand. With an 80-channel per-fiber system, this uses half the available spectrum. To stay within safe margins for service growth, a rule of thumb is to cap usage at 70%, or 56 channels, which holds the remaining 24 in reserve for future upgrades. At the same growth rate, demand would pass that 56-channel ceiling during year six, so the headroom left after year five is less than a year’s worth of growth. Such calculations drive not only capacity planning but the economics of fiber deployment and leasing.
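The arithmetic in this illustration can be checked with a few lines of Python. This is a minimal sketch using only the figures from the example (500 Gb/s growing to 4 Tb/s over five years, 100 Gb/s channels, 80 channels per fiber, a 70% cap); the function names are illustrative and not taken from any planning tool.

```python
import math

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two demand levels."""
    return (end / start) ** (1 / years) - 1

def years_to_reach(start: float, target: float, rate: float) -> float:
    """Years of compound growth needed to go from start to target."""
    return math.log(target / start) / math.log(1 + rate)

start_gbps, end_gbps, years = 500, 4000, 5
channel_gbps, channels_per_fiber, cap_percent = 100, 80, 70

growth = cagr(start_gbps, end_gbps, years)
lit = math.ceil(end_gbps / channel_gbps)             # channels lit in year five
usable = channels_per_fiber * cap_percent // 100     # channels allowed under the cap
reserve = channels_per_fiber - usable                # channels held back
years_to_cap = years_to_reach(start_gbps, usable * channel_gbps, growth)

print(f"CAGR: {growth:.1%}")
print(f"Channels lit in year {years}: {lit} of {channels_per_fiber}")
print(f"Usable under {cap_percent}% cap: {usable}, reserved: {reserve}")
print(f"Cap reached after about {years_to_cap:.1f} years")
```

Integer arithmetic is used for the cap so that the channel count is not affected by floating-point rounding.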

Research on optical network optimization has formalized these trade-offs into network-wide models that balance physical impairments, topology, and cost. Standards like ANSI/TIA-942 and EN 50600 further anchor these decisions by prescribing performance and availability tiers for modern data centers.

Predicting fiber network capacity with software

Once the physical network exists, operators face a subtler challenge: predicting when capacity will run out and where upgrades will be needed. Traditional planning relied on spreadsheets and rule-of-thumb margins. Modern software now treats the network as a dynamic system, continuously simulated and optimized.

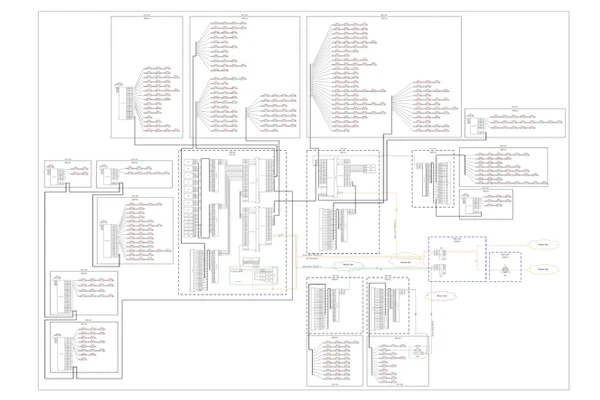

At its core, capacity prediction combines topology, optical parameters, and traffic forecasts to compute usable throughput. A digital model – or “optical digital twin” – ingests every strand length, every fiber splice, and every amplifier. Algorithms then solve for the maximum feasible throughput given noise margins and modulation limits.

For example, a DWDM ring of 12 nodes and 200 km total span, with erbium-doped fiber amplifiers (EDFAs) every 40 km, might support 32 × 100 Gb/s channels under today’s QPSK modulation and 0.22 dB/km attenuation. If a software model indicates that average OSNR (optical signal-to-noise ratio) margin is 5 dB, upgrading to 200 Gb/s (using 16-QAM) could cut the margin to 1 dB – unacceptably low. The tool might then recommend either additional amplifiers or a hybrid 100/200 Gb/s mix until regeneration points are upgraded.
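The hybrid 100/200 Gb/s recommendation can be sketched as a per-lightpath margin check. The 4 dB upgrade penalty is the one implied by the example (5 dB falling to 1 dB); the lightpath names, their individual margins, and the 2 dB planning floor are hypothetical values chosen for illustration, not output from a real tool.

```python
from dataclasses import dataclass

ATTENUATION_DB_PER_KM = 0.22   # from the example
SPAN_KM = 40                   # EDFA spacing from the example
UPGRADE_PENALTY_DB = 4.0       # implied by the example: 5 dB margin drops to 1 dB
MIN_MARGIN_DB = 2.0            # assumed planning floor, not from the source

@dataclass
class Lightpath:
    name: str
    osnr_margin_db: float      # margin at 100 Gb/s QPSK (hypothetical values)

def span_loss_db(span_km: float, attenuation_db_per_km: float) -> float:
    """Fiber loss each amplifier must compensate (splices and connectors ignored)."""
    return span_km * attenuation_db_per_km

def choose_rates(paths, penalty_db, floor_db):
    """Pick 200 Gb/s only where the margin left after the upgrade stays above the floor."""
    plan = {}
    for path in paths:
        remaining = path.osnr_margin_db - penalty_db
        plan[path.name] = 200 if remaining >= floor_db else 100
    return plan

paths = [
    Lightpath("A-B", 7.5),
    Lightpath("B-C", 5.0),
    Lightpath("C-D", 3.0),
    Lightpath("D-A", 6.5),
]

print(f"Loss per span: {span_loss_db(SPAN_KM, ATTENUATION_DB_PER_KM):.1f} dB")
plan = choose_rates(paths, UPGRADE_PENALTY_DB, MIN_MARGIN_DB)
for name, rate in plan.items():
    print(f"{name}: {rate} Gb/s")
```

A real planning tool would derive each margin from a physical-layer model rather than take it as an input; the sketch only shows the decision step.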

Artificial-intelligence approaches accelerate this process dramatically. A study showed that machine-learning models could estimate link capacity and total cost within 2% of full physical simulations, but thousands of times faster. Such tools allow fiber network planners to explore dozens of “what-if” scenarios – adding new routes, changing modulation schemes, or re-using dark fiber – in minutes instead of weeks.

Capacity-forecasting systems increasingly integrate real-time telemetry as well. By feeding live OTDR traces, signal-to-noise readings, and power-level data into predictive models, the software can detect degradation and predict when fibers will no longer meet required bit-error-rate thresholds. The emerging digital-twin paradigm for fiber optic networks extends this approach to full lifecycle simulation, merging physics-based and data-driven modeling.
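One simple way to turn telemetry into a prediction is to fit a straight line to the margin history and extrapolate to the threshold. The sketch below assumes a linear decline and uses hypothetical readings; production systems would likely use richer models, and the function names here are illustrative.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line through the points; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def days_until_threshold(days, margins_db, threshold_db):
    """Days after the last sample until the fitted margin reaches the threshold.

    Returns None when the trend is flat or improving.
    """
    slope, intercept = linear_fit(days, margins_db)
    if slope >= 0:
        return None
    crossing_day = (threshold_db - intercept) / slope
    return max(0.0, crossing_day - days[-1])

# Hypothetical monthly OSNR-margin readings for one fiber (day, dB).
sample_days = [0, 30, 60, 90, 120]
sample_margins = [5.0, 4.8, 4.6, 4.4, 4.2]

remaining = days_until_threshold(sample_days, sample_margins, threshold_db=3.0)
print(f"Estimated days until margin falls to 3.0 dB: {remaining:.0f}")
```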

Fiber asset management and network calculations

If bandwidth planning and capacity prediction represent the “brains” of the fiber network, asset management represents its memory. Without accurate documentation – which cable goes where, how many fiber strands are spliced, which connectors degrade – even the best models become abstractions.

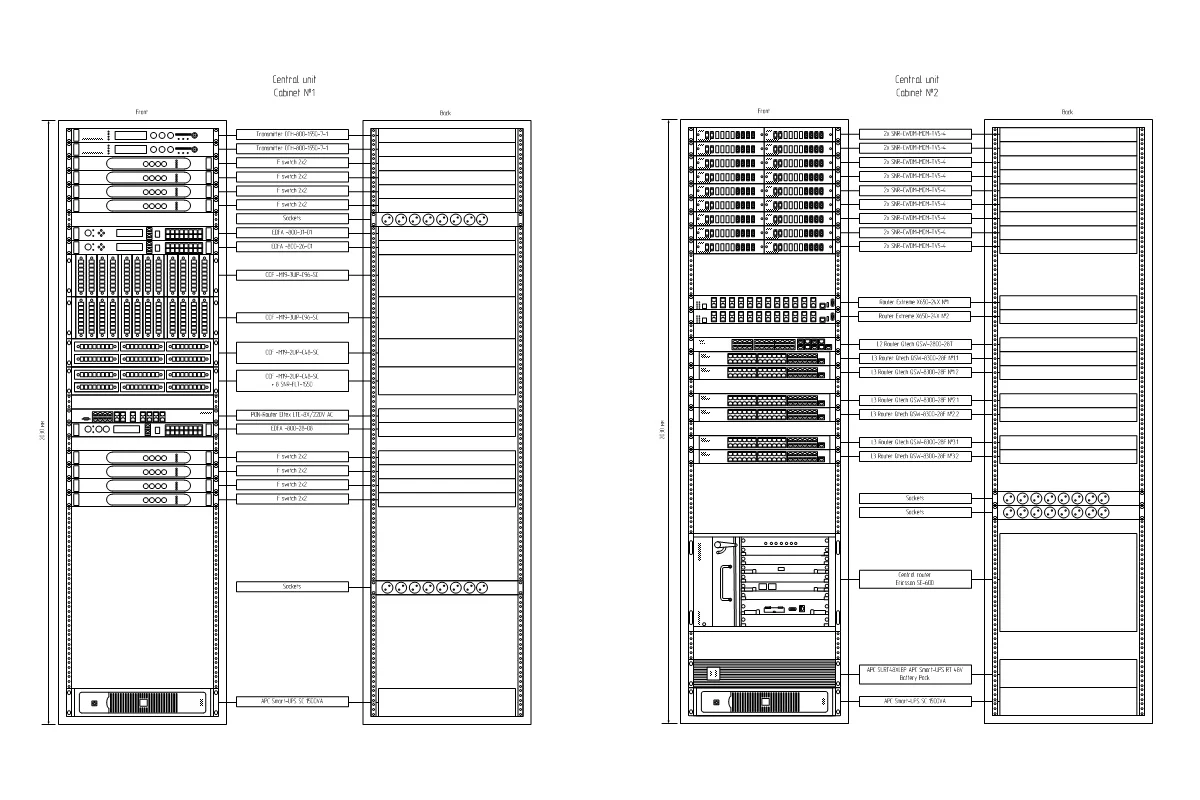

Modern fiber asset-management software functions as a living digital map of the network. Systems such as FNT, Patchmanager, and 3Gis exemplify this new class of tools. They maintain strand-level inventories, geographic routing, fiber splice data, and circuit cross-connections. When integrated with DCIM or GIS platforms, they visualize entire networks down to individual connectors and ports. One system that stands out is Splice.me.

Consider a regional operator managing 100 km of duct containing a 12-fiber cable. If 10 of those fibers are currently active and two are held dark, the utilization ratio is roughly 83%. Predictive asset software can forecast when utilization will exceed a safe threshold – say 90% – based on the historical rate of circuit provisioning. If the operator has been adding one new fiber pair every quarter, the tool will flag that the next pair consumes both remaining dark fibers, so spare capacity runs out within a single quarter, prompting immediate procurement or construction planning.
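The projection itself is simple arithmetic, sketched below. With the 12-fiber figures from the example (10 active, one pair added per quarter) the two dark fibers are used up by the very next pair; the 48-fiber case is a hypothetical added to show how the horizon stretches with a larger cable. Function names are illustrative.

```python
import math

def quarters_until_exhausted(total: int, active: int, fibers_per_quarter: int) -> int:
    """Whole quarters until no dark fibers remain at a steady provisioning rate."""
    if fibers_per_quarter <= 0:
        raise ValueError("fibers_per_quarter must be positive")
    return math.ceil((total - active) / fibers_per_quarter)

def quarters_until_over(total: int, active: int, fibers_per_quarter: int,
                        threshold: float):
    """Whole quarters until utilization first exceeds the threshold.

    Returns None if the cable fills up without ever exceeding it.
    """
    if fibers_per_quarter <= 0:
        raise ValueError("fibers_per_quarter must be positive")
    quarters = 0
    while active / total <= threshold:
        if active >= total:
            return None
        active = min(total, active + fibers_per_quarter)
        quarters += 1
    return quarters

# Figures from the example: 12 fibers, 10 active, one pair (2 fibers) per quarter.
print(f"Utilization: {10 / 12:.0%}")
print("Quarters until over 90%:", quarters_until_over(12, 10, 2, 0.90))
print("Quarters until exhausted:", quarters_until_exhausted(12, 10, 2))

# Hypothetical larger cable: 48 fibers, 30 active, same provisioning rate.
print("48-fiber cable, quarters until over 90%:", quarters_until_over(48, 30, 2, 0.90))
print("48-fiber cable, quarters until exhausted:", quarters_until_exhausted(48, 30, 2))
```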

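The same inventory supports loss-budget checks, where total link loss is the sum of fiber attenuation, splice losses, and connector losses along the route. If optical transceivers tolerate at most 10 dB of total loss and a link’s computed loss comes in just under that figure, the link remains viable – but only barely. An automated alert might recommend cleaning connectors or inserting a mid-span amplifier to preserve margin. In large networks, such simple calculations, when automated, prevent hundreds of service degradations per year.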
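A loss-budget check of this kind can be sketched as follows. Only the 10 dB budget comes from the text; the link length, splice and connector counts, per-event losses, and the 1 dB alert threshold are hypothetical values chosen so that the total lands just under the budget.

```python
BUDGET_DB = 10.0            # transceiver budget from the text
ALERT_MARGIN_DB = 1.0       # assumed alert threshold, not from the source

def link_loss_db(length_km: float, splices: int, connectors: int,
                 attenuation_db_per_km: float = 0.22,
                 splice_loss_db: float = 0.1,
                 connector_loss_db: float = 0.5) -> float:
    """Total loss as fiber attenuation plus splice and connector losses.

    The default per-event losses are typical planning values used here
    as assumptions.
    """
    return (length_km * attenuation_db_per_km
            + splices * splice_loss_db
            + connectors * connector_loss_db)

# Hypothetical link: 35 km, 8 fusion splices, 2 connectors.
loss = link_loss_db(length_km=35, splices=8, connectors=2)
margin = BUDGET_DB - loss
needs_attention = 0 <= margin < ALERT_MARGIN_DB

print(f"Link loss: {loss:.1f} dB, margin: {margin:.1f} dB")
if margin < 0:
    print("Link exceeds the budget")
elif needs_attention:
    print("Viable, but margin is thin: inspect connectors or consider amplification")
else:
    print("Link is within budget")
```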

Beyond engineering accuracy, asset intelligence yields economic benefits. By linking physical utilization to financial depreciation, operators can tie spare fiber inventory directly to capital efficiency metrics. This bridges the gap between field technicians and financial controllers – between glass and ledger.

Integrating the layers

When bandwidth planning, capacity prediction, and asset management are treated as separate silos, the result is predictable: uncoordinated upgrades, duplicated investments, and unplanned outages. The scientific challenge of our time is integration – making these systems converse in real time.

A modern integrated workflow might begin with digital-twin asset data, feeding physical parameters into a capacity-forecasting engine. The results then flow into the planning platform, where engineers simulate traffic evolution and test alternative build-outs. Finally, NOC and DCIM systems close the feedback loop by providing live telemetry. This circular architecture turns what was once static documentation into a continuously learning model of the network.

Standards continue to evolve to support this integration. The ISO/IEC 14763-3 standard governs testing of optical fiber cabling, while ITU-T G.694.1 defines the DWDM frequency grid and channel spacing essential for bandwidth planning. These, together with cybersecurity standards like IEC 62443 and information-security frameworks like ISO/IEC 27001, form the technical and governance spine of modern fiber operations.
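To make the grid concrete: the fixed DWDM grid in ITU-T G.694.1 is anchored at 193.1 THz, with channels at integer multiples of the chosen spacing (12.5, 25, 50, or 100 GHz) on either side. The short sketch below computes channel frequencies and their vacuum wavelengths; the function names are illustrative.

```python
C_M_PER_S = 299_792_458     # speed of light in vacuum
ANCHOR_THZ = 193.1          # anchor frequency of the fixed DWDM grid

def channel_frequency_thz(n: int, spacing_ghz: float = 50.0) -> float:
    """Center frequency of grid channel n (n may be negative)."""
    return ANCHOR_THZ + n * spacing_ghz / 1000.0

def wavelength_nm(frequency_thz: float) -> float:
    """Vacuum wavelength corresponding to an optical frequency."""
    return C_M_PER_S / (frequency_thz * 1e12) * 1e9

# Five channels around the anchor on a 50 GHz grid.
for n in range(-2, 3):
    f = channel_frequency_thz(n)
    print(f"n={n:+d}: {f:.2f} THz, {wavelength_nm(f):.2f} nm")
```

Halving the spacing doubles the number of channels that fit in a given band, which is why the grid choice feeds directly into the channel counts used in capacity planning.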

A glimpse of the future

The next decade will likely see some networks evolve toward self-optimizing optical fabrics. AI-driven models will continuously predict fiber health, trigger proactive rerouting, and suggest cost-optimal expansions. Asset-management systems will morph into autonomous registries that verify their own data against field telemetry, while DCIM and GIS systems merge into holistic spatial-temporal representations of entire infrastructures. In that world, the fiber planner’s role will not disappear but transform – from deterministic engineer to systems scientist, from spreadsheet manager to designer of feedback loops. Of course, such systems will always remain vulnerable to the human factor.

Conclusion

The beauty of a fiber network lies in its invisibility: the silent transmission of light carrying the pulse of civilization. But that silence is fragile. Sustaining it demands a fusion of physical science, predictive modeling, and rigorous asset discipline.

Bandwidth planning ensures the network’s arteries are sized for tomorrow’s load. Capacity-prediction software translates uncertain growth into quantitative foresight. Fiber asset-management systems preserve the physical truth of the network, ensuring that digital models correspond to real cables buried under cities or hanging overhead.

Further reading and standards

- Freitas, A. & Pires, J. J. O. (2024). Using Artificial Neural Networks to Evaluate the Capacity and Cost of Multi-Fiber Optical Backbone Networks. Photonics, 11(12), 1110. MDPI

- Pires, J. J. O. (2023). On the Capacity of Optical Networks. org

- Müller et al. (2023). QoT Estimation using EGN-assisted Machine Learning for Multi-period Network Planning. arXiv:2305.07332

- ANSI/TIA-942: Telecommunications Infrastructure Standard for Data Centers

- EN 50600: European Standard for Data Center Infrastructure

- ITU-T G.694.1: Spectral grids for WDM applications (DWDM frequency grid)

- ISO/IEC 14763-3: Fiber-optic testing methods and link loss measurement

- ISO/IEC 27001: Information-security management for data-center operations

- IEC 62443: Cybersecurity for industrial and infrastructure systems
